CSV (csvkit) and JSON (jq)

Working with CSV and JSON files (using csvkit and jq)

Marek Šuppa

Ondrej Jariabka

Adrián Matejov

Why CSV and JSON for Data Science?

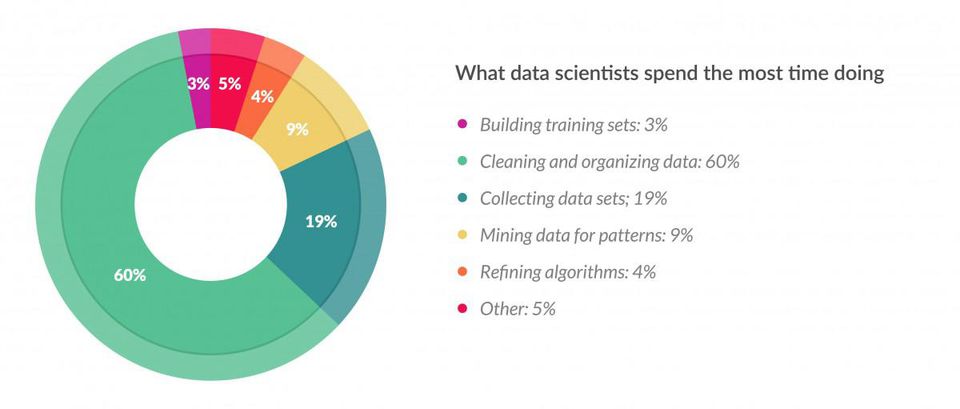

If you end up doing something close to Data Science, chances are you will spend a good chunk of your time cleaning data.

Why CSV and JSON for Data Science? II

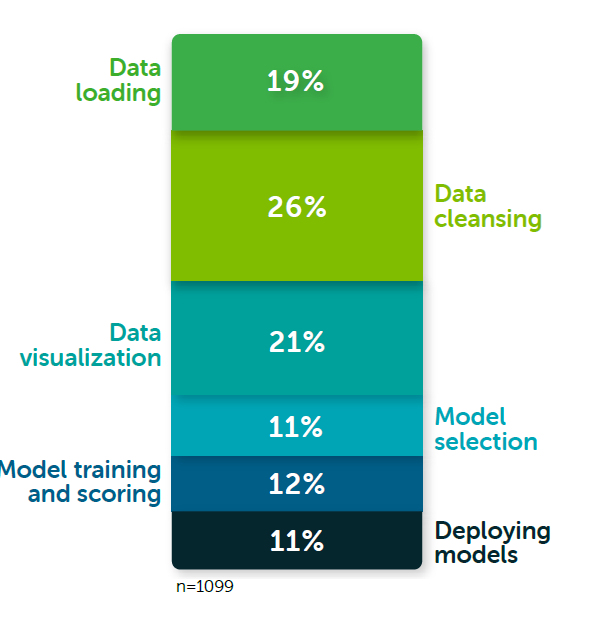

Situation may have changed, but data wrangling is a significant part of Data Scientist's job:

Thinking about your current job, how much of your time is spent in each of the following tasks?

Anacodna, 2020 (n=1,099)

Why CSV and JSON for Data Science? III

Data is generally stored in various file formats:

Plaintext (

.txt)Spreadsheet-like (

.tsv,.csv,.xls,.xlsx...)Streaming data (

.json,.jsonl)Columnar data store (

.parquet,.orc)

Why CSV and JSON for Data Science? III

Data is generally stored in various file formats:

Plaintext (

.txt)Spreadsheet-like (

.tsv,.csv,.xls,.xlsx...)Streaming data (

.json,.jsonl)Columnar data store (

.parquet,.orc)

The standard Unix toolking work very nicely with plaintext files.

Today, we'll discuss the tools for working with two other, very often used file

formats: CSV and JSON.

CSV

Comma Separated Values

Comma Separated Values (CSV)

Easily the most often used data-storage format.

Comma Separated Values (CSV)

Easily the most often used data-storage format.

For some, the name is "the whole standard". Just ponder on it:

A file format

For storing values

Separated by commas

$ cat cars.csv 1997,Ford,E3502000,Mercury,Cougar1996,Jeep,Grand CherokeeComma Separated Values (CSV)

Easily the most often used data-storage format.

For some, the name is "the whole standard". Just ponder on it:

A file format

For storing values

Separated by commas

$ cat cars.csv 1997,Ford,E3502000,Mercury,Cougar1996,Jeep,Grand CherokeeSo far so good, we could even process this with standard Unix tools (like awk).

Comma Separated Values (CSV)

Easily the most often used data-storage format.

For some, the name is "the whole standard". Just ponder on it:

A file format

For storing values

Separated by commas

$ cat cars.csv 1997,Ford,E3502000,Mercury,Cougar1996,Jeep,Grand CherokeeSo far so good, we could even process this with standard Unix tools (like awk).

But problems arise very soon. How would we add a car with , in is name?

Comma Separated Values (CSV)

Easily the most often used data-storage format.

For some, the name is "the whole standard". Just ponder on it:

A file format

For storing values

Separated by commas

$ cat cars.csv 1997,Ford,E3502000,Mercury,Cougar1996,Jeep,Grand CherokeeSo far so good, we could even process this with standard Unix tools (like awk).

But problems arise very soon. How would we add a car with , in is name?

Turns out we'll need to start escaping values, by wrapping them in quotes (").

$ cat cars-comma.csv1997,Ford,E3502000,Mercury,Cougar1996,Jeep,Grand Cherokee1999,Chevy,"Special edition, very large"Comma Separated Values (CSV) II

What if we had quotes in the name (")?

Comma Separated Values (CSV) II

What if we had quotes in the name (")?

Well, we'll have to wrap the whole line in quotes, and duplicate the actual quotes.

$ cat cars-comma-quotes.csv 1997,Ford,E3502000,Mercury,Cougar1996,Jeep,Grand Cherokee1999,Chevy,"Special edition, very large"1999,Chevy,"Large, ""extra"" special"Comma Separated Values (CSV) II

What if we had quotes in the name (")?

Well, we'll have to wrap the whole line in quotes, and duplicate the actual quotes.

$ cat cars-comma-quotes.csv 1997,Ford,E3502000,Mercury,Cougar1996,Jeep,Grand Cherokee1999,Chevy,"Special edition, very large"1999,Chevy,"Large, ""extra"" special"This is no longer processable with "standard" Unix tooling (like awk).

Comma Separated Values (CSV) III

CSV is a textbook example of how not to design a textual file format. Its problems begin with the case in which the separator character (in this case, a comma) is found inside a field. The Unix way would be to simply escape the separator with a backslash, and have a double escape represent a literal backslash.

... because the format rules are complex and underspecified, different implementations diverge in their handling of edge cases [...] Microsoft has incompatible versions of CSV files between its own applications, and in some cases between different versions of the same application.

Comma Separated Values (CSV) III

CSV is a textbook example of how not to design a textual file format. Its problems begin with the case in which the separator character (in this case, a comma) is found inside a field. The Unix way would be to simply escape the separator with a backslash, and have a double escape represent a literal backslash.

... because the format rules are complex and underspecified, different implementations diverge in their handling of edge cases [...] Microsoft has incompatible versions of CSV files between its own applications, and in some cases between different versions of the same application.

Handling these edge cases is why we need CSV-specific tooling.

csvkit is currently one of the best options.

csvkit

csvkit is a suite of command-line tools for converting to and working with CSV, the king of tabular file formats.

csvkit

csvkit is a suite of command-line tools for converting to and working with CSV, the king of tabular file formats.

It includes commands like

in2csv(to convert various tabular data formats into CSV)csvlook(visualize the tabular data in terminal)csvstat(print summary statisics of the input)csvcut(likecutbut for CSV columns)csvsort(likesortbut for tabular data)csvgrep(CSV specific regular expression matching)

The dataset

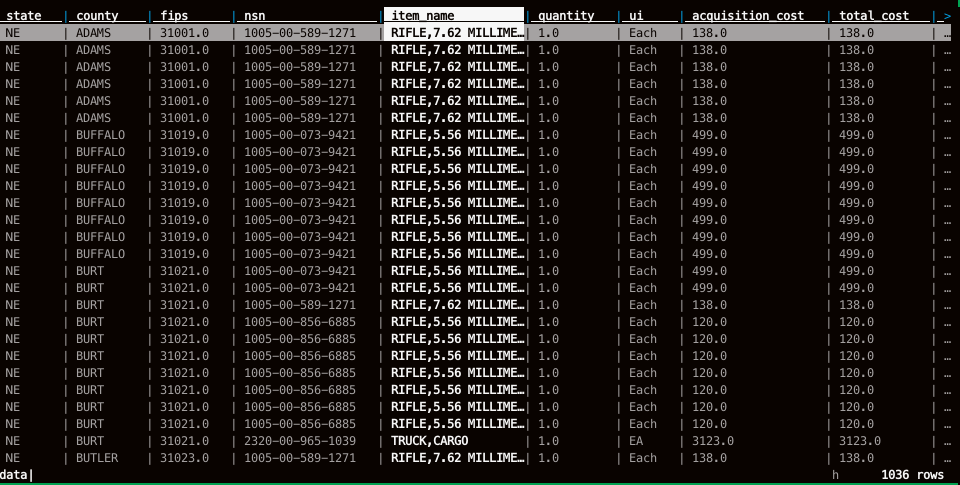

United States Defense Logistic Agency Law Enforcement Support Office’s (LESO) 1033 Program dataset

$ curl -L -O https://raw.githubusercontent.com/wireservice/csvkit/master/examples/realdata/ne_1033_data.xlsx`in2csv

Who wants to wait for Excel to load

As the name suggests, in2csv takes files in various formats and converts them to .csv.

--

We are most interested in the .xlsx to .csv conversion:

$ in2csv ne_1033_data.xlsx > data.csv--

To check that we did not mess it up, let's take a look at first few lines of the result:

$ head data.csvstate,county,fips,nsn,item_name,quantity,ui,acquisition_cost,total_cost,ship_date,federal_supply_category,federal_supply_category_name,federal_supply_class,federal_supply_class_nameNE,ADAMS,31001.0,1005-00-589-1271,"RIFLE,7.62 MILLIMETER",1.0,Each,138.0,138.0,2008-07-11,10.0,WEAPONS,1005.0,"Guns, through 30 mm"NE,ADAMS,31001.0,1005-00-589-1271,"RIFLE,7.62 MILLIMETER",1.0,Each,138.0,138.0,2008-07-11,10.0,WEAPONS,1005.0,"Guns, through 30 mm"NE,ADAMS,31001.0,1005-00-589-1271,"RIFLE,7.62 MILLIMETER",1.0,Each,138.0,138.0,2008-07-11,10.0,WEAPONS,1005.0,"Guns, through 30 mm"NE,ADAMS,31001.0,1005-00-589-1271,"RIFLE,7.62 MILLIMETER",1.0,Each,138.0,138.0,2008-07-11,10.0,WEAPONS,1005.0,"Guns, through 30 mm"NE,ADAMS,31001.0,1005-00-589-1271,"RIFLE,7.62 MILLIMETER",1.0,Each,138.0,138.0,2008-07-11,10.0,WEAPONS,1005.0,"Guns, through 30 mm"NE,ADAMS,31001.0,1005-00-589-1271,"RIFLE,7.62 MILLIMETER",1.0,Each,138.0,138.0,2008-07-11,10.0,WEAPONS,1005.0,"Guns, through 30 mm"NE,BUFFALO,31019.0,1005-00-073-9421,"RIFLE,5.56 MILLIMETER",1.0,Each,499.0,499.0,2008-09-24,10.0,WEAPONS,1005.0,"Guns, through 30 mm"NE,BUFFALO,31019.0,1005-00-073-9421,"RIFLE,5.56 MILLIMETER",1.0,Each,499.0,499.0,2008-09-24,10.0,WEAPONS,1005.0,"Guns, through 30 mm"NE,BUFFALO,31019.0,1005-00-073-9421,"RIFLE,5.56 MILLIMETER",1.0,Each,499.0,499.0,2008-09-24,10.0,WEAPONS,1005.0,"Guns, through 30 mm"csvcut

Like cut, but for CSV files.

Show me just the first, second and fifth columns

$ csvcut -c 1,2,5 data.csv | headstate,county,item_nameNE,ADAMS,"RIFLE,7.62 MILLIMETER"NE,ADAMS,"RIFLE,7.62 MILLIMETER"NE,ADAMS,"RIFLE,7.62 MILLIMETER"NE,ADAMS,"RIFLE,7.62 MILLIMETER"NE,ADAMS,"RIFLE,7.62 MILLIMETER"NE,ADAMS,"RIFLE,7.62 MILLIMETER"NE,BUFFALO,"RIFLE,5.56 MILLIMETER"NE,BUFFALO,"RIFLE,5.56 MILLIMETER"NE,BUFFALO,"RIFLE,5.56 MILLIMETER"csvcut II

csvcut can also (strangely and non-UNIX-y) show the columns

$ csvcut -n data.csv 1: state 2: county 3: fips 4: nsn 5: item_name 6: quantity 7: ui 8: acquisition_cost 9: total_cost 10: ship_date 11: federal_supply_category 12: federal_supply_category_name 13: federal_supply_class 14: federal_supply_class_namecsvlook

As you saw, just cating the output is not really all that readable.

$ csvcut -c 1,2,4 data.csv | head | csvlook| state | county | nsn || ----- | ------- | ---------------- || NE | ADAMS | 1005-00-589-1271 || NE | ADAMS | 1005-00-589-1271 || NE | ADAMS | 1005-00-589-1271 || NE | ADAMS | 1005-00-589-1271 || NE | ADAMS | 1005-00-589-1271 || NE | ADAMS | 1005-00-589-1271 || NE | BUFFALO | 1005-00-073-9421 || NE | BUFFALO | 1005-00-073-9421 || NE | BUFFALO | 1005-00-073-9421 |csvstat

.left-eq-larger-column[

$ csvcut -c 1,2 data.csv | csvstat 1. "state" Type of data: Text Contains null values: False Unique values: 1 Longest value: 2 characters Most common values: NE (1036x) 2. "county" Type of data: Text Contains null values: False Unique values: 35 Longest value: 10 characters Most common values: DOUGLAS (760x) DAKOTA (42x) CASS (37x) HALL (23x) LANCASTER (18x)Row count: 1036]

.right-eq-smaller-column[

- Allows you to get a quick look at what sort of data are we looking at

]

csvstat II

.left-eq-larger-column[

$ csvcut -c county,ship_date data.csv | csvstat 1. "county" Type of data: Text Contains null values: False Unique values: 35 Longest value: 10 characters Most common values: DOUGLAS (760x) DAKOTA (42x) CASS (37x) HALL (23x) LANCASTER (18x) 2. "ship_date" Type of data: Date Contains null values: False Unique values: 84 Smallest value: 2006-03-07 Largest value: 2014-01-30 Most common values: 2013-04-25 (495x) 2013-04-26 (160x) 2008-05-20 (28x) 2012-04-16 (26x) 2006-11-17 (20x)Row count: 1036]

.right-eq-smaller-column[

Infers the datatype of a column

Gives you a rundown of what to expect

]

csvstat III

.left-eq-larger-column[

$ csvcut -c quantity data.csv | csvstat 1. "quantity" Type of data: Number Contains null values: False Unique values: 28 Smallest value: 1 Largest value: 200 Sum: 2,915 Mean: 2.814 Median: 1 StDev: 10.669 Most common values: 1 (966x) 25 (8x) 2 (6x) 10 (6x) 8 (5x)Row count: 1036]

.right-eq-smaller-column[

For Numbers, it computes some of the statistics we'd expect:

- smallest/largest value

- sum/mean/median/stdev

- most common values

]

csvgrep

Let's look all items that came to the county of LANCASTER

$ csvcut -c county,item_name,total_cost data.csv | csvgrep -c county -m LANCASTER| county | item_name | total_cost || --------- | ------------------------------ | ---------- || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | LIGHT ARMORED VEHICLE | 0 || LANCASTER | LIGHT ARMORED VEHICLE | 0 || LANCASTER | LIGHT ARMORED VEHICLE | 0 || LANCASTER | MINE RESISTANT VEHICLE | 412,000 || LANCASTER | IMAGE INTENSIFIER,NIGHT VISION | 6,800 || LANCASTER | IMAGE INTENSIFIER,NIGHT VISION | 6,800 || LANCASTER | IMAGE INTENSIFIER,NIGHT VISION | 6,800 || LANCASTER | IMAGE INTENSIFIER,NIGHT VISION | 6,800 |csvsort

$ csvcut -c county,item_name,total_cost data.csv | csvgrep -c county -m LANCASTER | csvsort -c total_cost -r | csvlook| county | item_name | total_cost || --------- | ------------------------------ | ---------- || LANCASTER | MINE RESISTANT VEHICLE | 412,000 || LANCASTER | IMAGE INTENSIFIER,NIGHT VISION | 6,800 || LANCASTER | IMAGE INTENSIFIER,NIGHT VISION | 6,800 || LANCASTER | IMAGE INTENSIFIER,NIGHT VISION | 6,800 || LANCASTER | IMAGE INTENSIFIER,NIGHT VISION | 6,800 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | RIFLE,5.56 MILLIMETER | 120 || LANCASTER | LIGHT ARMORED VEHICLE | 0 || LANCASTER | LIGHT ARMORED VEHICLE | 0 || LANCASTER | LIGHT ARMORED VEHICLE | 0 |class: middle, center, inverse

JSON

JSON

stands for JavaScript Object Notation

is a lightweight format for storing and transporting data

is often used when data is sent from a server to a web page

is "self-describing" and easy to understand

--

{ "name": "Buster", "breed": "Golden Retriever", "age": 4, "is_a_dog": true, "owner": { "name": "Sally" }, "likes": [ "bones", "balls", "dog biscuits" ], "siblings": null}jq

jq is a command line JSON processor.

$ cat dog.json{ "name": "Buster", "breed": "Golden Retriever", "age": 4, "is_a_dog": true, "owner": { "name": "Sally" }, "likes": [ "bones", "balls", "dog biscuits" ], "siblings": null}--

$ cat dog.json | jq .{ "name": "Buster", "breed": "Golden Retriever", "age": 4, "is_a_dog": true, "owner": { "name": "Sally" }, "likes": [ "bones", "balls", "dog biscuits" ], "siblings": null}jq filtering

.left-eq-column[

$ cat dog.json | jq .{ "name": "Buster", "breed": "Golden Retriever", "age": 4, "is_a_dog": true, "owner": { "name": "Sally" }, "likes": [ "bones", "balls", "dog biscuits" ], "siblings": null}]

.right-eq-column[

jq can nicely filter out values attached to concrete keys

$ cat dog.json | jq '.name'"Buster"It can also filter multiple keys passed at the same time:

$ cat dog.json | jq '.breed,.age'"Golden Retriever"4It can also work with arrays;

$ cat dog.json | jq '.likes[0]'"bones"]

CSV and JSON conversion

csvkit also has a nice tool to converte between the two: csvjson

$ csvcut -c county,item_name,total_cost data.csv | csvgrep -c county -m LANCASTER | csvsort -c total_cost -r | csvjson --indent 4 | head -n 11[ { "county": "LANCASTER", "item_name": "MINE RESISTANT VEHICLE", "total_cost": 412000.0 }, { "county": "LANCASTER", "item_name": "IMAGE INTENSIFIER,NIGHT VISION", "total_cost": 6800.0 },