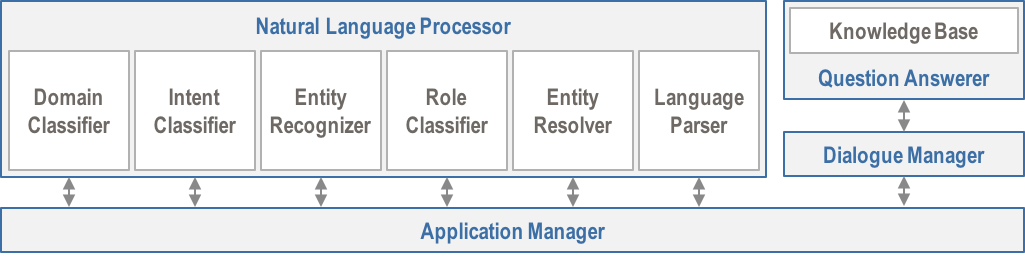

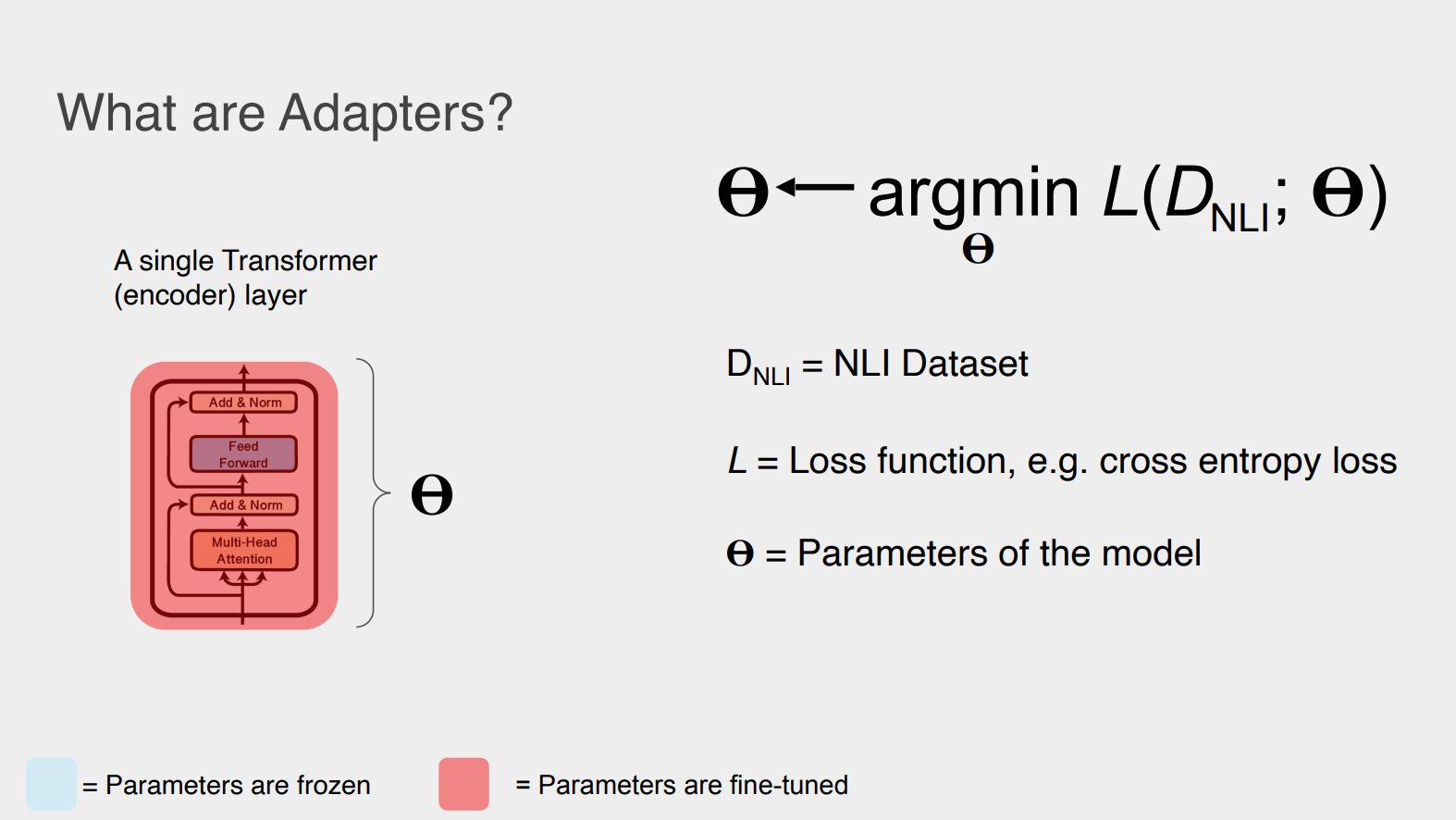

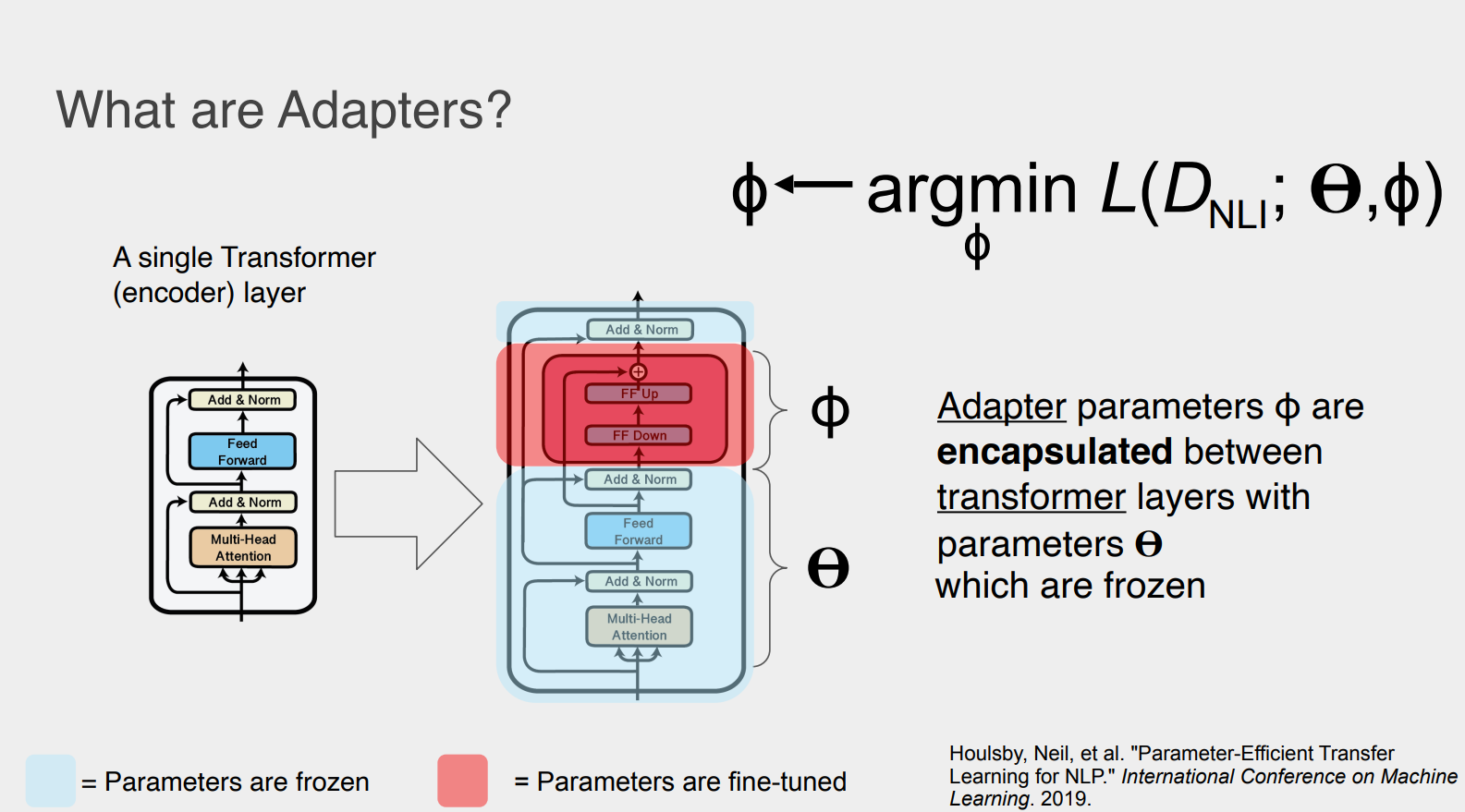

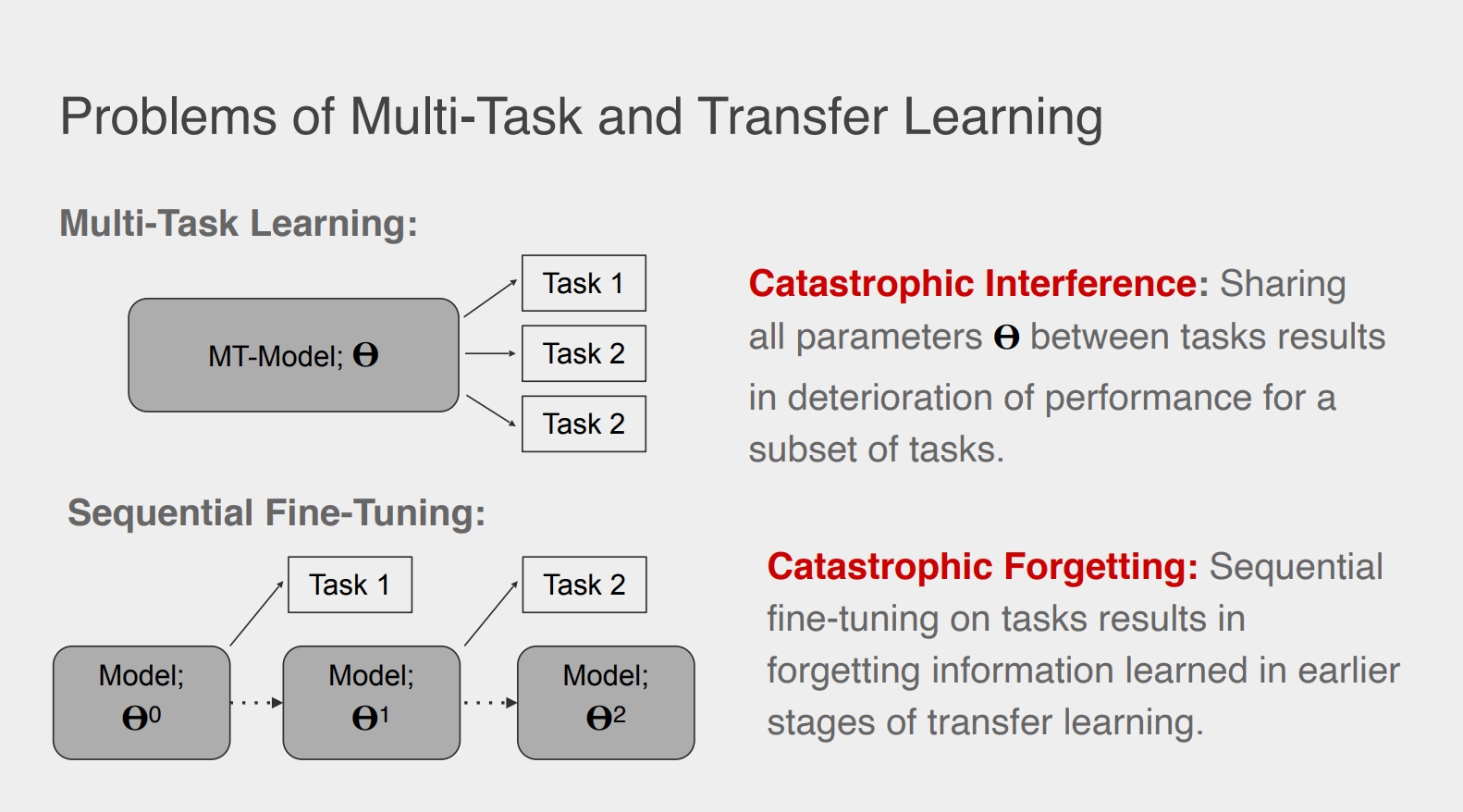

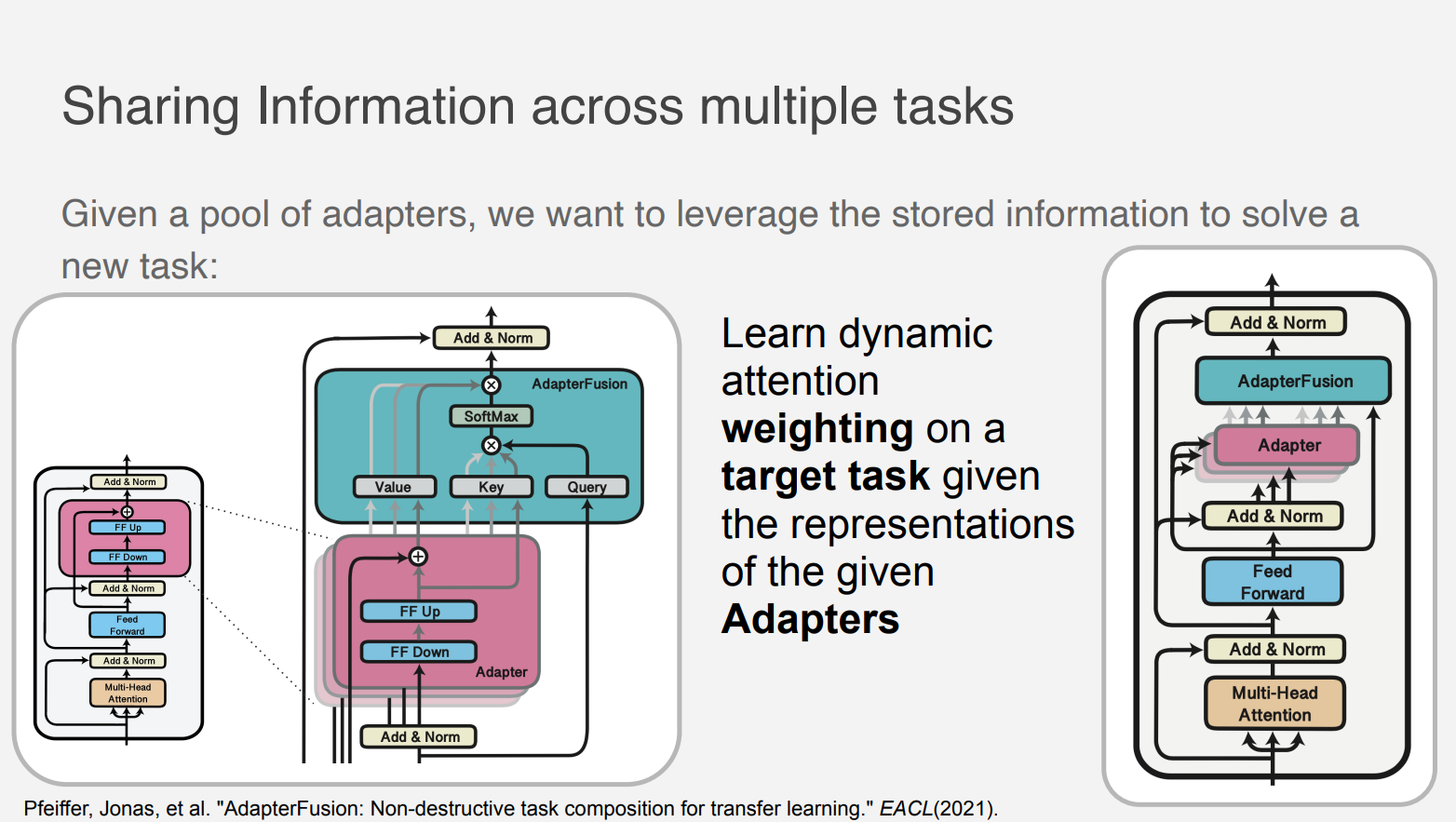

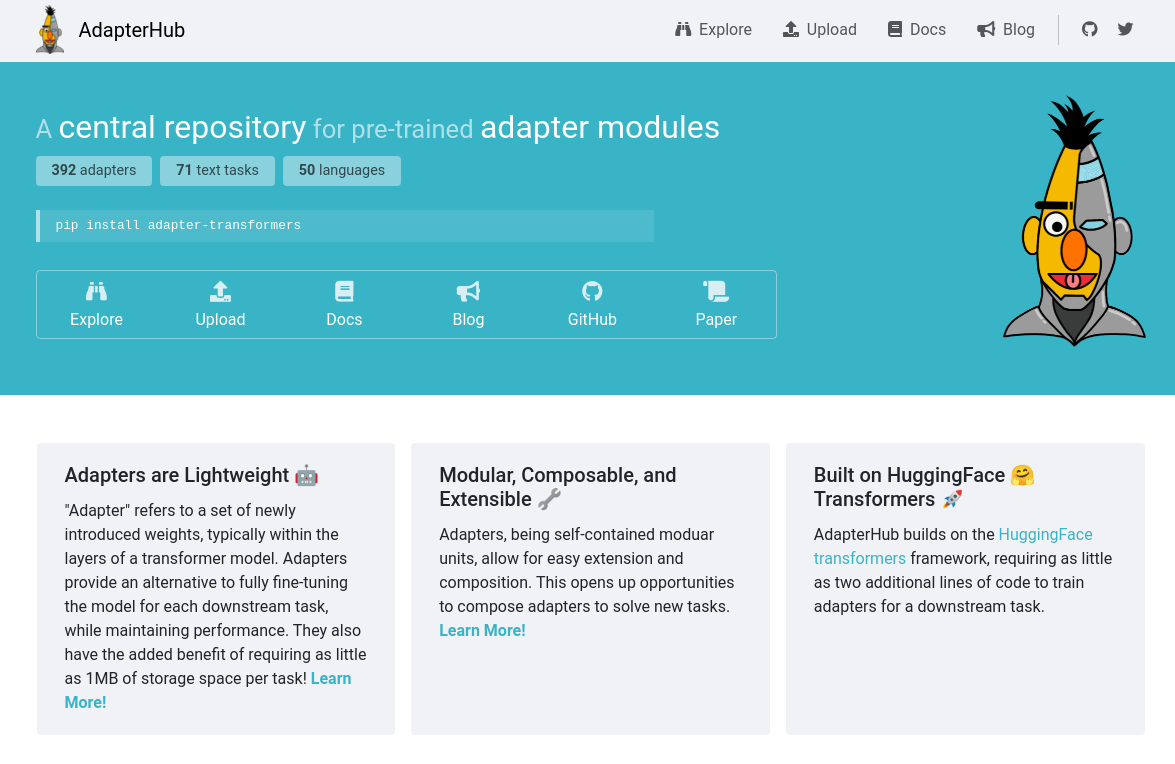

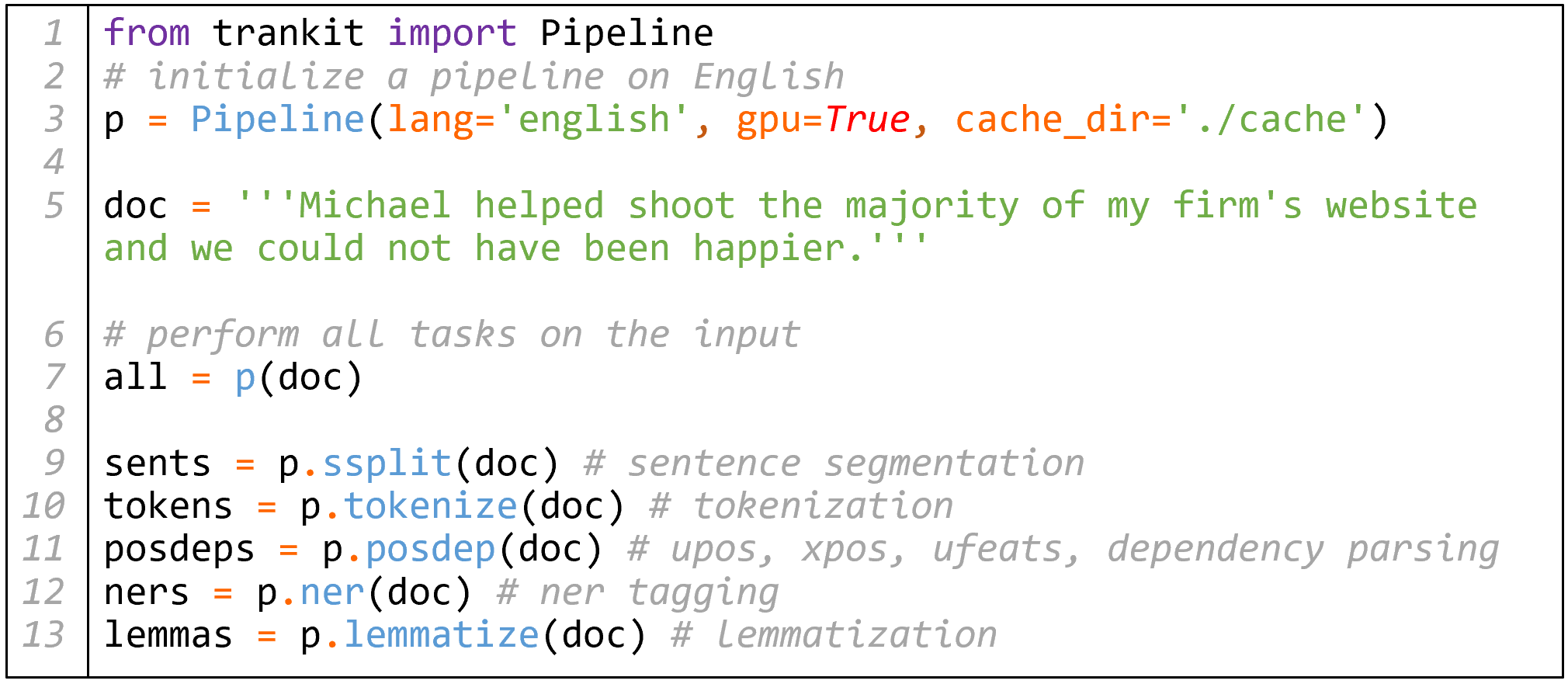

name: inverse
layout: true
class: center, middle, inverse

---

# Adapters

A neat (and production-enabling) trick for multi-task and multi-lingual NLP

.footnote[Marek Šuppa<br />PyData Global<br />2021]

---

layout: false

# How do you solve an NLP task in 2021?

--

1. Go to https://huggingface.co/models

--

2. See if you can find a trained model that solves a similar task

--

3. If not, take `(AL)BERT`, `BART`, `T5` or `GPT-2`

--

4. Finetune (only the top layers / heads)

--

5. Maybe unfreeze some more if you feel like it

--

6. You're done!

---

# Solving multi-task NLP problems in 2021

1. Go to https://huggingface.co/models

--

2. See if you can find a trained model that solves one of the tasks

--

3. If not, take `(AL)BERT`, `BART`, `T5` or `GPT-2`

--

4. Finetune (only the top layers / heads) for this specific task

--

5. Repeat steps 2 to 4 for every task you have

--

If each task gets its own model, though, the resulting package is going to be big to massive (even with small models like `TinyBERT`)

---

# *Finetuning* vs. Adapters

.center[.font-small[From [*Adapters in Transformers*](http://lxmls.it.pt/2021/wp-content/uploads/2021/07/Iryna_Jonas_Adapters-in-Transformers.pdf) by Jonas Pfeiffer and Iryna Gurevych]]

---

# Finetuning vs. *Adapters*

.center[.font-small[From [*Adapters in Transformers*](http://lxmls.it.pt/2021/wp-content/uploads/2021/07/Iryna_Jonas_Adapters-in-Transformers.pdf) by Jonas Pfeiffer and Iryna Gurevych]]

---

# Multi-task Problems

.center[.font-small[From [*Adapters in Transformers*](http://lxmls.it.pt/2021/wp-content/uploads/2021/07/Iryna_Jonas_Adapters-in-Transformers.pdf) by Jonas Pfeiffer and Iryna Gurevych]]

---

# Multi-task Problems: AdapterFusion

.center[.font-small[From [*Adapters in Transformers*](http://lxmls.it.pt/2021/wp-content/uploads/2021/07/Iryna_Jonas_Adapters-in-Transformers.pdf) by Jonas Pfeiffer and Iryna Gurevych]]

---

# AdapterHub

.center[https://adapterhub.ml/]

---

# AdapterHub

`adapter-transformers` is a drop-in replacement for HuggingFace Transformers, installable with

```
pip install adapter-transformers
```

--

--------------------

Doing inference is then quite easy:

```python
# adapter-transformers is imported under the usual `transformers` name
from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained("bert-base-uncased")
model.load_adapter("sentiment/sst-2@ukp")
model.set_active_adapters("sst-2")
```

--

The adapter is quite small -- just 3MB!

--

```python
import torch
from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")
tokens = tokenizer.tokenize("AdapterHub is awesome!")
# Wrap the token ids in a list to get a batch of size one
input_tensor = torch.tensor([tokenizer.convert_tokens_to_ids(tokens)])
outputs = model(input_tensor)
```

---

# AdapterHub: Training

Similar story, with just a few changes compared to a "standard" pipeline:

```python
from transformers import AutoModelWithHeads

model = AutoModelWithHeads.from_pretrained('roberta-base')
model.add_adapter("sst-2")
model.train_adapter("sst-2")
```

--

The last line freezes all the Transformer parameters, leaving only the Adapter trainable

--

```python
model.add_classification_head("sst-2", num_labels=2)
model.set_active_adapters("sst-2")

# ... other config settings would go here

trainer.train()
```

--

Once the training is done, we can easily save the Adapters separately:

```python
model.save_all_adapters('output-path')
```

More at https://adapterhub.ml

---

# Adapters in action: `trankit`

.left-eq-column[
- A spaCy alternative
- Each part of a pipeline in each language is its own Adapter
]

.right-eq-column[
- Provides 90 new pretrained pipelines for 56 languages
- Very small model sizes
]

--

```
pip install trankit
```

---

# Closing thoughts

--

- If you are working on multi-task and/or multilingual NLP, give Adapters a try

--

- If you do, feel free to reach out -- I'd be very interested to hear how it went!

.center[
<br />
<br />
<br />
.font-xx-large[**[mareksuppa.com](https://mareksuppa.com)**]
]

---

# References

- **Adapters in Transformers by Jonas Pfeiffer and Iryna Gurevych** ([pdf](http://lxmls.it.pt/2021/wp-content/uploads/2021/07/Iryna_Jonas_Adapters-in-Transformers.pdf))
- **AdapterFusion: Non-Destructive Task Composition for Transfer Learning** ([video](https://slideslive.com/embed/presentation/38954355)) ([paper](https://aclanthology.org/2021.eacl-main.39.pdf))
- **MAD-X: An Adapter-Based Framework for Multi-Task Cross-Lingual Transfer** ([video](https://slideslive.com/38938991/madx-an-adapterbased-framework-for-multitask-crosslingual-transfer)) ([paper](https://aclanthology.org/2020.emnlp-main.617/))
- **[AdapterHub](https://adapterhub.ml/): A Framework for Adapting Transformers** ([paper](https://arxiv.org/abs/2007.07779))
- **[Trankit](https://github.com/nlp-uoregon/trankit): A Light-Weight Transformer-based Toolkit for Multilingual Natural Language Processing** ([paper](https://arxiv.org/pdf/2101.03289.pdf))
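
---

# Appendix: why is an Adapter so small?

A rough back-of-the-envelope check on the "just 3MB" figure from the AdapterHub slide. This is only a sketch under assumptions that are not in the slides: a bottleneck Adapter made of one down-projection and one up-projection (with biases), a BERT-base-sized model (hidden size 768, 12 layers), one Adapter per layer, a reduction factor of 16 and 32-bit floats. The function names are made up for illustration and are not part of `adapter-transformers`.

```python
def bottleneck_adapter_params(hidden_size: int, reduction_factor: int) -> int:
    """Parameter count of one bottleneck Adapter (assumed layout, see above)."""
    bottleneck = hidden_size // reduction_factor
    down = hidden_size * bottleneck + bottleneck   # down-projection weights + bias
    up = bottleneck * hidden_size + hidden_size    # up-projection weights + bias
    return down + up


def adapter_total_params(hidden_size: int = 768,
                         num_layers: int = 12,
                         reduction_factor: int = 16,
                         adapters_per_layer: int = 1) -> int:
    """Parameters added to the whole model by one task's Adapters."""
    per_adapter = bottleneck_adapter_params(hidden_size, reduction_factor)
    return num_layers * adapters_per_layer * per_adapter


def adapter_size_mb(bytes_per_param: int = 4, **kwargs) -> float:
    """Approximate on-disk size in MB, assuming 32-bit floats by default."""
    return adapter_total_params(**kwargs) * bytes_per_param / 1e6


if __name__ == "__main__":
    print(f"params per task: {adapter_total_params():,}")
    print(f"approx. size:    {adapter_size_mb():.2f} MB")
```

Under these assumptions each extra task costs under a million parameters, a small fraction of the roughly 110 million parameters in a BERT-base-sized model, which is why shipping one base model plus many Adapters stays small.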